How to Choose Predictive Models Like an Expert: Simple Decision Framework

Did you know that predictive modeling has become a core competency for high-performing marketing and revenue teams?

The right predictive models help us make better decisions and develop effective strategies based on historical data. But choosing from numerous available options can feel overwhelming.

Predictive analytics models combine historical data, statistical algorithms, and machine learning techniques to predict future outcomes. These models use past information to forecast future events that enable businesses to make proactive, informed decisions.

A simple yet powerful decision framework will help you select the most appropriate predictive modeling techniques for your specific business needs confidently. This piece guides you through different types of predictive models to make choices that maximize your competitive advantage, whether you’re new to predictive modeling methods or want to refine your approach.

Understand the Role of Predictive Modeling

Predictive modeling is a powerful way for businesses to make informed decisions. Let’s understand what predictive modeling means and why picking the right model can affect your results by a lot.

What is predictive modeling?

Predictive modeling uses statistics and historical data to forecast future outcomes or unknown events. These events usually happen in the future, but predictive modeling works for any unknown event, whatever time it occurred. The core idea is simple – predictive modeling creates mathematical patterns from existing data to predict likely outcomes in new situations.

This approach combines statistical techniques with machine learning to turn raw historical data into valuable insights. These models look at past data to find patterns, spot trends, and use that information to predict what might happen next.

Businesses often call predictive modeling “predictive analytics”. People use these terms interchangeably to describe how companies use predictions. The field also shares a lot with machine learning, especially in academic research.

These models work amazingly fast and can give results right away real-time. Banks and stores can check risk levels instantly when someone applies online for a mortgage or credit card. Companies can make quick, smart decisions that would take too long to figure out by hand.

How it is different from descriptive and prescriptive analytics

The value of predictive modeling becomes clear when we look at the bigger analytics picture. Descriptive analytics tells you “What happened?” by looking back at data. Predictive analytics answers “What could happen?” based on past patterns.

Your company’s current situation becomes clear through descriptive analytics of past data. Predictive analytics shows where things might go by spotting likely future outcomes. Both types of analytics play their own important roles in a detailed strategy.

Prescriptive analytics goes one step beyond predictions. While predictive modeling shows possible outcomes, prescriptive analytics tells you what steps to take to get the results you want. Think of it this way – predictive analytics tells you what might happen, while prescriptive analytics suggests what you should do about it.

In spite of that, predictive analytics has its limitations. Random events or unexpected situations can throw off predictions because the analysis depends on past data. The predictions work only as well as the data you feed them.

Why model selection matters

Picking the right predictive model can make or break your analytics trip. Each model works best for specific tasks and gives different results based on your business question, how your data looks, and what you want to achieve.

The model selection process determines how well your machine learning system performs. Each type of model has its strong points and weak spots that directly affect how well your project works. To name just one example, see how some tasks need complex models to handle big data sets with lots of details, while others work better with simpler, focused models.

Poor model choice can lead to several problems:

- Overfitting: A model that works great with training data but fails with new information

- Underfitting: A model too basic to spot important patterns

- Wasted resources: Complex models need more computing power, time, and data

- Wrong insights: Bad models give unreliable predictions

You probably won’t find the best model for your problem without trying a few options. Some problems might point to specific types of models (like deep learning for processing language), but finding the right fit usually means testing different approaches and comparing how they work.

The best model balances several things: how well it performs, how complex it is, how easy it is to understand, and what the business needs. Even the most statistically perfect model won’t help if people can’t understand it or if it doesn’t match what the business wants to achieve.

Key Steps Before Choosing a Model

Building a predictive model needs careful preparation. Let’s get into the groundwork you need before picking the right model.

Define your prediction goal

A clear objective forms the foundations of any predictive modeling project. You need to state exactly what you want to predict and why. Your choice of model and how you measure its success depends on this clarity.

These basic elements matter when you set your prediction goal:

- Target population – who the model will predict for

- Outcome of interest – what needs prediction

- Setting where the model will work – primary care, research, or other contexts

- Intended users – who will use the model’s predictions

- Decisions the model will inform – how predictions affect decision-making

A well-defined objective guides every step in your modeling trip. Models might fail to deliver real business value without this clarity. Your goal statement should measure success yet stay practical enough.

Collect and clean historical data

Getting and preparing relevant historical data comes next. The best predictive models use data from prospective cohort studies designed for this purpose. Many teams also use existing datasets from cohort studies or randomized clinical trials.

Data cleaning affects model performance directly. You must modify or remove wrong, incomplete, badly formatted, or duplicate information. The old saying rings true: “garbage in, garbage out.” Even the best algorithms can’t fix bad data.

This phase needs you to:

- Look for missing values that might show less useful predictors

- Fix measurement errors in predictors and outcomes

- Take out observations with zero or near-zero variance

- Deal with duplicate records from multiple data sources

- Fix formats and naming inconsistencies

Good data preprocessing takes up 80% of project time. This work pays off through better model accuracy and reliability. Clean data helps your model find real patterns instead of magnifying noise or bias.

Identify relevant features

Picking the right variables (features) comes last in preparation. Feature selection helps your model work better by finding the most vital and unique variables in your dataset.

Good feature selection offers key benefits:

- Models work better without irrelevant features

- Less overfitting helps models work with new data

- Training runs faster with lower computing costs

- Results become easier to understand

Three main ways exist to select features:

- Filter methods: use statistical tests like correlation analysis

- Wrapper methods: test feature combinations through training and measuring results

- Embedded methods: combine feature selection with model training

Check how features relate to each other before finalizing your set. Features that relate too closely create redundancy (multicollinearity). Picking one feature from related groups often works best since others add little value.

Feature engineering can boost predictive power significantly. This process turns raw data into meaningful features that show underlying patterns. Well-engineered features often help more than picking the right algorithm.

These foundation steps help you make smart choices about model selection. This preparation builds a solid base for advanced predictive analytics models.

Types of Predictive Models and When to Use Them

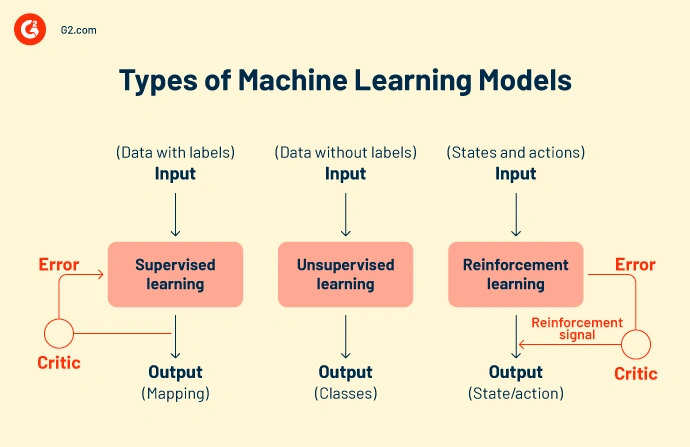

Image Source: G2

The best predictive model choice starts with a good grasp of your available options. Each model type shines in specific scenarios and brings its own advantages to the table. Let’s head over to the main categories of predictive models and learn the best times to use them.

Regression models for continuous outcomes

Regression models provide the quickest way to predict specific numerical values. Linear regression forms the foundations of predictive analytics. It determines relationships between variables and helps predict continuous values such as sales figures, prices, or test scores. This method works best with linear relationships between inputs and outputs.

Complex scenarios call for polynomial regression that handles non-linear relationships between variables. Logistic regression, though named similarly, actually works as a classification tool. It uses Maximum Likelihood Estimation instead of least squares to transform dependent variables.

Regression models are your best bet when you:

- Just need to predict specific numerical values

- See clear relationships between your variables

- Want to explain how different factors affect outcomes

Classification models for binary or categorical outcomes

Classification models sort data into distinct groups based on past patterns. These models figure out if observations fit into predefined categories, which makes them valuable tools in yes/no decisions or sorting items into multiple groups.

Binary classification tackles scenarios with two possible outcomes like spam/not spam or fraud/legitimate. Multi-class classification models such as Multinomial Regression step in when you’re dealing with multiple categories.

The most prominent classification algorithms include:

- Support Vector Machines (SVM): These create clear boundaries between categories

- K-Nearest Neighbors (KNN): Perfect for recommendations and grouping similar items

- Decision Trees: These show clear decision-making paths

- Naïve Bayes: This excels at text classification and sentiment analysis

Clustering models for segmentation

Clustering sets itself apart as an unsupervised technique. It finds natural groupings in data without preset categories by identifying similarities between data points.

K-means clustering splits data into k groups of equal variance and minimizes the within-cluster sum-of-squares. This helps identify customer segments, market patterns, or product categories based on natural similarities rather than preset rules.

Clustering models shine when you:

- Want to find hidden patterns in unlabeled data

- Need to segment customers or markets

- Look for natural groups to target strategically

Time series models for trend forecasting

Time series forecasting looks at data in chronological order to predict future values from past observations. These models spot patterns influenced by time elements like trends, seasonality, and cycles.

Research shows that time series forecasting helps predict weather patterns, economic indicators, healthcare trends, and retail demand. ARIMA (Autoregressive Integrated Moving Average) stands as one of the most accessible approaches to time-based predictions.

Anomaly detection models for outliers

Anomaly detection spots data points that substantially differ from the norm. This helps catch fraud, system failures, or unusual behavior. These models learn what “normal” looks like and flag anything that doesn’t fit the pattern.

Common methods include isolation forests that “isolate” observations through random feature selection and split values. Local Outlier Factor (LOF) compares a point’s local density to its neighbors.

Ensemble models for improved accuracy

Ensemble models merge multiple predictive models to achieve better accuracy than any single model could. They make use of information from various modeling approaches to reduce errors and strengthen generalizable signals.

The three main ensemble approaches are:

- Bagging (Bootstrap Aggregating): This creates multiple versions of the same model using different data subsets

- Boosting: Models build on each other, with new ones fixing previous errors

- Stacking: This uses predictions from multiple models to feed a meta-model that makes final predictions

Studies show that ensemble methods typically perform better than individual models in both accuracy and reliability. Therefore, they represent the most advanced approach when prediction quality is your main goal.

A Simple Framework to Choose the Right Model

A structured approach helps you navigate the world of predictive analytics and model selection. Here’s a practical framework that will help you choose the right predictive model based on your business needs.

Match model type to business question

Your specific business problem should determine the type of model you select. ML challenges fit into three main categories: regression problems that identify relationships between input features and continuous output variables, classification problems that sort data points into categories, and clustering problems that group similar data. The first step is to determine whether you need to predict numbers, categorize data, or find natural groups in your dataset.

Ask yourself these questions:

- Do you need to predict continuous values like price or sales figures? → Think over regression models

- Do you need to classify data into distinct categories? → Look at classification algorithms

- Do you want to segment your customer base? → Explore clustering techniques

- Do you need to analyze time-based patterns? → Break down time series models

Consider data size and structure

Your data’s volume and nature will shape your model selection. Small datasets work better with simpler models like linear regression or decision trees because they reduce overfitting risks. Large datasets allow you to use complex models like neural networks more effectively.

The complexity of your data matters too. Complex datasets need sophisticated models to capture their patterns. But be careful – models that are too complex can overfit, while oversimplified models might miss vital patterns in your data.

Evaluate interpretability vs. accuracy

The balance between interpretability and accuracy is one of your most important choices when picking a predictive model. More accurate models are usually harder to explain, and vice versa. This relationship is called the interpretability problem.

Regulated industries like banking, insurance, and healthcare need to understand the logic behind predictions. This understanding is often a legal requirement. These situations might need more interpretable models like decision trees or linear regression, even if they’re less accurate.

High-stakes situations, like medical diagnoses, need you to balance maximum accuracy with clear explanations. You can increase the interpretability of sophisticated algorithms without losing much accuracy by using surrogate models, variable importance measures, or permutation tests.

Use a model selection matrix

A selection matrix helps you assess potential models systematically. This approach lets you compare models across several key areas:

- Performance metrics: accuracy, precision, recall, F1 score for classification; RMSE for regression

- Computational efficiency: training time and resource requirements

- Explainability needs: how much you need to understand the “why” behind predictions

- Data compatibility: how well the model works with your data structure

Experimentation and evaluation are the best ways to select your prediction model. Test different models and compare their performance through cross-validation or on a validation set. Real-world testing often reveals things that theory alone cannot show.

How to Evaluate and Compare Predictive Models

The next significant step after picking potential models that fit your business needs involves testing their performance to find the best match for your specific case. A proper evaluation will give a reliable and accurate model that works well in real-life applications.

Use cross-validation and test sets

Data splitting between training and testing sets alone might not give you the full picture of model performance. Cross-validation is a great way to get a more thorough evaluation. This method systematically uses multiple chunks of your data to train and test. The technique helps you avoid being too optimistic or pessimistic about performance estimates.

K-fold cross-validation splits your dataset into k equal parts. The model trains k times, and each time a different part serves as the test set while the remaining k-1 parts become training data. The average results provide a more reliable performance assessment. Most data scientists use 10 folds – fewer folds lead to validation bias while more folds start to look like the leave-one-out method.

Key metrics: accuracy, precision, recall, RMSE

Your specific problem type determines which evaluation metrics you should pick:

For classification models:

- Accuracy shows how many cases were correctly classified overall, but watch out it can be misleading with imbalanced datasets

- Precision tells you how many positive classifications were actually right this matters when false positives can get pricey

- Recall (sensitivity) reveals how many actual positives you caught, this is vital when missing positive cases could be risky

- F1 score blends precision and recall, which helps when both matter equally

For regression models:

- Root Mean Squared Error (RMSE) hits larger errors harder than smaller ones

- Mean Absolute Error (MAE) gives you a clear view by measuring average size of errors without directional bias

- R-squared shows how much variance your model explains – higher numbers mean better fit

Avoid overfitting and underfitting

Finding the right balance between model complexity and knowing how to generalize remains the biggest problem in predictive modeling. Overfitting happens when your model learns the training data too well, including noise. This leads to great training scores but poor results with new data.

Underfitting occurs when models are too simple to catch important patterns. These models perform poorly on both training and testing data. You can spot these issues by looking at the gap between training and validation performance a big gap often points to overfitting, while poor scores on both suggest underfitting.

You can curb these problems by using regularization techniques, tweaking model complexity, trying ensemble methods, and most importantly, verifying your model with proper cross-validation. The goal is to find that sweet spot where your model catches real patterns without memorizing noise.

Deploying and Monitoring Your Predictive Model

A predictive model’s experience continues beyond selection and evaluation. Your model will give you business value through practical use and continuous improvement.

Integrate into business workflows

Predictive models work best when technology lines up with business goals. Your first step should be to identify specific, measurable business targets instead of chasing technology-first initiatives. You need standardized data schemas that handle data consistently and clear strategies to manage data lifecycle. Most organizations should test their architecture with pilot projects before they add streaming capabilities to existing workflows. This approach helps turn your predictions into practical business strategies.

Set up performance monitoring

Continuous monitoring matters because about 60% of deployed ML algorithms fail after pilot stage. They simply don’t know how to adapt to changing conditions. Your monitoring should track:

- Model performance metrics – accuracy, precision, recall for classification models; MAE, MSE, RMSE for regression models

- Data drift – changes in statistical properties of input data over time

- Prediction drift – changes in distribution of model outputs

Good monitoring warns you early about performance drops and shows why problems happen.

Plan for retraining with new data

Your model’s performance starts to decline as ground conditions change right after production deployment. You can retrain either at scheduled times or when performance falls below set limits. Continuous training updates models automatically when performance changes. This keeps them accurate despite changing conditions. Cross-validation should verify all retrained models before deployment.

Conclusion

Predictive modeling has become crucial to informed business success. This piece explores how picking the right predictive model affects your way to extract valuable insights from historical data. A structured approach guides you through what might seem like too many options.

Without doubt, everything starts with clear prediction goals before data preparation and feature selection. These basic steps determine how well any model will work, whatever its sophistication. On top of that, knowing the main types of predictive models regression, classification, clustering, time series, anomaly detection, and ensemble methods, helps match the right tool to your business question.

Our simple decision framework balances key factors in model selection. The original step lines up model type with your business question. Then you think over data size and structure. Next comes weighing interpretability against accuracy based on your industry’s needs and use case. A model selection matrix helps assess options systematically.

Note that evaluation continues after model selection. Cross-validation techniques and proper metrics confirm your model’s reliability with new data. Active prevention of overfitting and underfitting will give a model that captures real patterns instead of memorizing noise.

The best models need proper deployment and constant attention. Integrating predictive models into business workflows, setting up monitoring systems, and planning regular retraining with fresh data are foundations of long-term success.

Predictive modeling opens huge opportunities for organizations ready to put in the work. Though picking the right model seems tough at first, this systematic approach will improve your chances by a lot. You’ll build predictive models that deliver real business value and stay effective over time.

Key Takeaways

Master predictive model selection with this expert framework that transforms complex decisions into actionable steps for better business outcomes.

• Match your model to your business question first – Use regression for numerical predictions, classification for categories, and clustering for segmentation to ensure alignment with objectives.

• Balance interpretability with accuracy based on your industry needs – Regulated sectors like healthcare require explainable models, while others can prioritize performance over transparency.

• Prepare your data thoroughly before model selection – Clean data and proper feature selection consume 80% of project effort but directly determine model success.

• Use cross-validation and multiple metrics to evaluate performance – Avoid overfitting by testing models on unseen data and measuring accuracy, precision, recall, or RMSE as appropriate.

• Plan for continuous monitoring and retraining after deployment – 60% of ML models fail post-pilot due to changing conditions, making ongoing performance tracking essential.

The key to successful predictive modeling lies not in choosing the most sophisticated algorithm, but in systematically matching the right model to your specific business context, data characteristics, and performance requirements.

FAQs

Q1. What are the key factors to consider when choosing a predictive model? When selecting a predictive model, consider the nature of your target variable, computational performance requirements, dataset size, data separability, and the balance between bias and variance. Match the model type to your business question, evaluate interpretability vs. accuracy, and use a model selection matrix to compare options systematically.

Q2. What are the main types of predictive models and when should they be used? The main types include regression models for continuous outcomes, classification models for categorical outcomes, clustering models for segmentation, time series models for trend forecasting, and anomaly detection models for outliers. Choose based on your specific prediction goal – for example, use regression for numerical predictions and classification for categorizing data into distinct groups.

Q3. How can I evaluate the performance of my predictive model? Use cross-validation techniques to assess model performance more thoroughly. For classification models, consider metrics like accuracy, precision, recall, and F1 score. For regression models, use Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and R-squared. Always validate your model on unseen data to avoid overfitting.

Q4. What steps should I take before choosing a predictive model? Before selecting a model, clearly define your prediction goal, collect and clean historical data, and identify relevant features. Data preparation is crucial and typically consumes about 80% of a modeling project’s effort. Proper feature selection and engineering can significantly improve model performance.

Q5. How should I maintain my predictive model after deployment? After deployment, integrate the model into business workflows, set up continuous performance monitoring, and plan for regular retraining with new data. Monitor for model performance metrics, data drift, and prediction drift. Consider implementing either periodic retraining at scheduled intervals or trigger-based retraining when performance drops below set thresholds.